During week 7, we came up with our deliverables, milestones, tasks, tall pole analysis, and mitigation. This can be found in the Intelligent Prosthetic Arm tab of my site.

Author Archives: Karissa Barbarevech

Project 2 Week 6

In week 6, we looked into possible servos for the shoulders. It was determined that DC motors would not work with the servo controller chosen. The decision was made to use the HS-805MG by HITEC because it is high torque with a large range of motion. The rest of the week was spent discussing the project proposal.

Project 2 Week 5

In week 5, we began working on getting the arm and hand motions smoothed out. We realized the shoulder servos were not strong enough to lift the arm and began considering DC motors.

Project 2 Week 4

In week 4, we discussed the additional boards needed to allow the Raspberry Pi to control multiple servos and read the EEG and EMG signals. The High-Precision AD/DA Board was chosen for the EEG and EMG signals, and the Adafruit 16 Channel Servo Driver was chosen to drive the servos.

Project 2 Week 3

In week 3, we made plans for going forward with using the ADlab to read EEG and EMG signals and interpreting them with the Raspberry Pi. We also went over the reasons behind our hardware choices.

Project 2 Week 1

This week we were given project options. Christopher Gasper and I chose the Intelligent Prosthetic Arm. The rest of the time was spent figuring out what supplies we needed and what microcontroller to use. We decided on the Raspberry Pi, which will need additional boards.

Project 2 Proposal

My Project 2 proposal can be found under the Intelligent Prosthetic Arm tab on my site.

Project 2 Week 2

Overall Project

- Why: Prosthetics improve amputees' well-being both physically and mentally.

- What exists: Current prosthetics have a learning curve, which requires the user to relearn how to move their appendages.

- What this project brings: This project allows the wearer to immediately use the prosthetic without having to relearn anything. The limb will move with thought processes normally used to move natural limbs.

Processor

- Why: The Raspberry Pi was chosen for its ability to control multiple servos. It also has a lot of information available online to troubleshoot.

- External components vs. internal capabilities: To control the servos, the Raspberry Pi requires two additional shields, but with these shields the only other thing necessary is supplying constant power to the servos.

- Speed/cushion/improvement possibilities: The database of categorized brain waves can be improved in the future to include other limbs, which can then be coded for.

Hardware Choices

- Availability: The arm was supplied by the department for the purpose of testing this project.

- Reliability: It has not yet been determined whether or not the arm is reliable, but the department has stated that there has been prior success controlling it.

- In the future, a more lifelike arm will need to be implemented, but for the purpose of initial testing we will use the arm supplied.

Final Project Update

Communication was not established between the RoboPhilo and the computer. Because this milestone had to be met before communication with the Kinect could be worked on, only one of the milestones was achieved. After troubleshooting the robot for several weeks, I exhausted all online resources for making the robot work and found out that in many cases the RoboPhilo never works.

Looking forward, I do not think it would be practical to continue this project using the included ATmega32 microcontroller to communicate with the robot. If the RoboPhilo is used in the future, I would recommend using a different microcontroller. This presents a problem of powering the individual servos, so modifications to the servos may have to be made depending on the microcontroller choice. The more practical solution would be to research a different robot, if the budget allows. Regardless of this choice, I would also recommend confirming that the robot is functional before beginning the project. Otherwise, the student may spend more time troubleshooting the robot than actually tuning and programming it, as I did.

Once the robot is functional, Kinect communication could be established. My plan for this was to have the Kinect read the joint angles of the person in front of the camera and input these values into a motion file for the robot, which would then play back the user's motions. During playback, it would also compare the values with a preexisting set of values within a certain tolerance. If the two differed by an amount greater than this tolerance, the robot would perform the preexisting motion. If the two were similar enough, the robot would perform the next exercise downloaded.
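The comparison step in this plan could look something like the sketch below. This is only an illustration of the tolerance check, not code that was written or run for the project. The joint names, the 10 degree default tolerance, and the function names `within_tolerance` and `next_action` are all made up for the example, and nothing here talks to the Kinect or the RoboPhilo.

```python
from typing import Dict

# Joint angles in degrees, keyed by a joint name (names are hypothetical).
JointAngles = Dict[str, float]


def within_tolerance(observed: JointAngles,
                     reference: JointAngles,
                     tolerance_deg: float) -> bool:
    """Return True if every reference joint was observed within the tolerance."""
    for joint, target in reference.items():
        if joint not in observed:
            # A joint the camera did not report counts as a miss.
            return False
        if abs(observed[joint] - target) > tolerance_deg:
            return False
    return True


def next_action(observed: JointAngles,
                reference: JointAngles,
                tolerance_deg: float = 10.0) -> str:
    """Decide what the robot does after playing back the user's motion.

    "advance" means move on to the next exercise.
    "repeat" means perform the preexisting (reference) motion again.
    The 10 degree default is an assumed value, not one from the project.
    """
    if within_tolerance(observed, reference, tolerance_deg):
        return "advance"
    return "repeat"


if __name__ == "__main__":
    reference = {"shoulder": 90.0, "elbow": 45.0}
    attempt = {"shoulder": 95.0, "elbow": 40.0}
    print(next_action(attempt, reference))
```

In practice the tolerance would probably need to be set per joint and tuned against real Kinect readings, since some joints are tracked less accurately than others.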

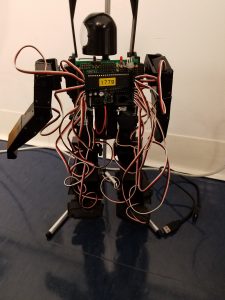

Front of Robot

Back of Robot

ATmega32

Week 7

During week 7, I worked on my project closeout. This included posting a final update on where I got with my project and where someone else can pick it up. This week also included a final report, which will be posted on its own page. The next step in the process is to determine what project I will be working on for the rest of the academic year.