Below is most of the written work for the robot as well as the code and links to the Kinect libraries we used for reference.

Code:

using System;

using System.Collections.Generic;

using System.Linq;

using System.Text;

using System.Windows;

using System.Windows.Controls;

using System.Windows.Data;

using System.Windows.Documents;

using System.Windows.Input;

using System.Windows.Media;

using System.Windows.Media.Imaging;

using System.Windows.Navigation;

using System.Windows.Shapes;

using Microsoft.Kinect;

using System.Collections;

using System.IO.Ports;

//namespace kinect1

//{

public class kinect1

{

public kinect1()

{

InitializeComponent();

//}

#include <kdl/frames.hpp> // For KDL::Vector, KDL:Rotation, etc

XnFloat* m; // Temporary variable for accessing matrix elements

// Get torso xyz position

XnSkeletonJointPosition xn_pos_torso;

UserGenerator.GetSkeletonCap().GetSkeletonJointPosition(user, XN_SKEL_TORSO, xn_pos_torso);

KDL::Vector torso(joint_pos_torso.position.X, joint_pos_torso.position.Y, joint_pos_torso.position.Z);

// Get torso rpy rotation

XnSkeletonJointOrientation xn_orient_torso;

UserGenerator.GetSkeletonCap().GetSkeletonJointOrientation(user, XN_SKEL_TORSO, xn_orient_torso);

m = xn_orient_torso.orientation.elements;

KDL::Rotation torso_rotation(m[0], m[1], m[2], m[3], m[4], m[5], m[6], m[7], m[8]);

//Get right shoulder xyz position

XnSkeletonJointPosition xn_pos_r_shoulder;

UserGenerator.GetSkeletonCap().GetSkeletonJointPosition(user, XN_SKEL_RIGHT_SHOULDER, xn_pos_r_shoulder);

KDL::Vector right_shoulder(xn_pos_r_shoulder.position.X, xn_pos_r_shoulder.position.Y, xn_pos_r_shoulder.position.Z);

// Get left shoulder xyz position

XnSkeletonJointPosition xn_pos_l_shoulder;

UserGenerator.GetSkeletonCap().GetSkeletonJointPosition(user, XN_SKEL_LEFT_SHOULDER, xn_pos_l_shoulder);

KDL::Vector left_shoulder(xn_pos_l_shoulder.position.X, xn_pos_l_shoulder.position.Y, xn_pos_l_shoulder.position.Z);

// Get left shoulder rpy rotation

XnSkeletonJointOrientation xn_orient_l_shoulder;

UserGenerator.GetSkeletonCap().GetSkeletonJointOrientation(user, XN_SKEL_LEFT_SHOULDER, xn_orient_l_shoulder);

m = xn_orient_l_shoulder.orientation.elements;

KDL::Rotation left_shoulder_rotation(m[0], m[1], m[2], m[3], m[4], m[5], m[6], m[7], m[8]);

//Get left elbow xyz position’

XnSkeletonJointPosition xn_pos_l_elbow;

UserGenerator.GetSkeletonCap().GetSkeletonJointPosition(user, XN_SKEL_LEFT_ELBOW, xn_pos_l_elbow);

KDL::Vector left_elbow(xn_pos_l_elbow.position.X, xn_pos_l_elbow.position.Y, xn_pos_l_elbow.position.Z);

// Get left hand xyz position

XnSkeletonJointPosition xn_pos_l_hand;

UserGenerator.GetSkeletonCap().GetSkeletonJointPosition(user, XN_SKEL_LEFT_HAND, xn_pos_l_hand);

KDL::Vector left_hand(xn_pos_l_hand.position.X, xn_pos_l_hand.position.Y, xn_pos_l_hand.position.Z);

// We need this inverse rotation to correct all of the other joints’ XYZ and RPY into a torso relative space.

KDL::Rotation torso_rotation_inverse = torso_rotation.Inverse();

// Right shoulder position – remove torso rotation and translation

right_shoulder = right_shoulder – torso;

right_shoulder = torso_rotation_inverse * right_shoulder;

// Left shoulder rotation – remove torso rotation and extract angles

left_shoulder_rotation = left_shoulder_rotation * torso_rotation_inverse;

double left_shoulder_roll, left_shoulder_pitch, left_shoulder_yaw;

left_shoulder_rotation.GetRPY(left_shoulder_roll, left_shoulder_pitch, left_shoulder_yaw);

// Left shoulder position – remove torso rotation and translation

left_shoulder = left_shoulder – torso;

left_shoulder = torso_rotation_inverse * left_shoulder;

// Left elbow position – remove torso rotation and translation

left_elbow = left_elbow – torso;

left_elbow = torso_rotation_inverse * left_elbow;

// Left hand position – remove torso rotation and translation

left_hand = left_hand – torso;

left_hand = torso_rotation_inverse * left_hand;

// Now all of our data considers their (X,Y,Z) and (R,P,Y) to have their (0,0,0) at the torso rather than at the Kinect camera.

// These coordinates are now ready to process to create useful joint angles to send to a robot.

// The following vectors are normalized so that when we create triangles out of them we can treat them as being within the unit circle, and the trigonometry is simplified.

// These first two vectors have the shoulder as the origin. Useful for calculating left shoulder pitch and roll

KDL::Vector left_shoulder_right_shoulder = right_shoulder – left_shoulder;

left_shoulder_right_shoulder.Normalize();

KDL::Vector left_shoulder_elbow = left_elbow – left_shoulder;

left_shoulder_elbow.Normalize();

// These last two vectors have the elbow as the origin. Useful for calculating the elbow angle.

KDL::Vector left_elbow_shoulder = left_shoulder – left_elbow;

left_elbow_shoulder.Normalize();

KDL::Vector left_elbow_hand = left_hand – left_elbow;

left_elbow_hand.Normalize();

static double robot_left_shoulder_angle_roll = 0;

if (xn_pos_r_shoulder.fConfidence >= 0.9 && xn_pos_l_elbow.fConfidence >= 0.9 && xn_pos_l_shoulder.fConfidence >= 0.9)

{

// Calculate the angle between two vectors. By comparing the left elbow, left shoulder, and right shoulder positions, we can get the inner angle.

robot_left_shoulder_angle_roll = acos(KDL.dot(left_shoulder_elbow, left_shoulder_right_shoulder));

// Shift the angle to consider arms down as zero position

robot_left_shoulder_angle_roll = robot_left_shoulder_angle_roll – HALFPI;

}

static double robot_left_shoulder_angle_pitch = 0;

// static: if a confidence check fails, previous iteration’s value is used.

if (xn_pos_l_shoulder.fConfidence >= 0.9 && xn_pos_l_elbow.fConfidence >= 0.9)

{

// Remember, Y from the Kinect coordinate system is actually Z on the robot.

// This considers a triangle where the hypotenuse is your arm.

// We want to take the inverse sign of the forward component of your arm to calculate the angle that we should send to the robot’s pitch joint.

robot_left_shoulder_angle_pitch = asin(left_shoulder_elbow.y());

// Shift the angle to consider arms down as zero position

robot_left_shoulder_angle_pitch = robot_left_shoulder_angle_pitch + HALFPI;

}

// left shoulder yaw

static double left_shoulder_angle_yaw = 0;

if (xn_pos_l_shoulder.fConfidence >= 0.9)

{

// In this case, we can take the rotation directly from the Kinect data.

// However, this method isn’t very reliable.

// The rotational information for joints frmo NITE is very unreliable.

// In certain positions of the user’s arms, this method will fail.

// I suggest that you try to make a better trigonometric solution.

// Remember, robot yaw = kinect roll because robot Z = kinect Y

left_shoulder_angle_yaw = left_shoulder_roll;

}

static double robot_left_elbow_angle = 0;

if (xn_pos_l_hand.fConfidence >= 0.9 && xn_pos_l_elbow.fConfidence >= 0.9 && xn_pos_l_shoulder.fConfidence >= 0.9)

{

// calculate the angle between two vectors.

// by comparing the left shoulder, left elbow, and left hand positions, we can get the inner angle

robot_left_elbow_angle = acos(KDL.dot(left_elbow_hand, left_elbow_shoulder));

// Shift the angle to consider arms down as zero position

robot_left_elbow_angle = left_elbow_angle – PI;

}

}

}

Reference library used for program was KDL and can be found here: https://github.com/orocos/orocos_kinematics_dynamics/blob/master/orocos_kdl/src/frames.hpp

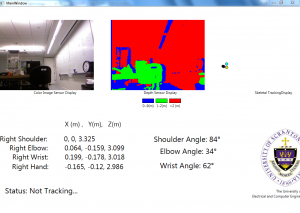

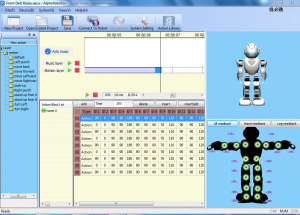

Below is photos of the robot program and Kinect program running: